Selenium Webscraping in Azure Functions

Ever since Azure added support for custom containers in Azure Functions, I've been looking for a real use case for them. Recently, I needed to do some web scraping with Selenium: issuing search queries to Google and Bing and navigating to a desired site.

This type of work lends itself to a timer-triggered Azure Function, where cost-effectiveness matters more than scalability. However, Selenium needs a headless browser available in order to perform the site navigation, and the built-in Azure Functions environment does not accommodate this requirement. Enter a custom container.

Starting from the Azure Functions base image and adding the Chrome binary lets us take advantage of the serverless features of Functions while still supporting non-standard dependencies like Chrome and Selenium.

This post is not meant to be a soup-to-nuts beginner's how-to guide; instead, it highlights what I found to be interesting lessons learned while building an end-to-end project that runs containers on Azure Functions.

Source code can be found at https://github.com/gantta/seo-bot-docker

Prerequisites:

Build and Test Locally

For this project, I'm using VS Code to build and test locally on my machine. The dotnet build, restore, and run tasks are defined in the .vscode\tasks.json file. When running locally on Windows, the app looks for a local chromedriver.exe installed as part of the Selenium package. When we containerize the application, we will install the Linux chromedriver binary in the image instead.

To differentiate between the two operating systems, the local.settings.json file has a setting that defines the environment: local or dev.

Setting this variable to local enables the Windows Chrome driver. Any other value assumes the app is running in a Linux environment.
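The app itself is .NET, but the selection rule is simple enough to sketch in any language. Below is a minimal Python illustration of the same decision, assuming the setting is the ENVIRONMENT key that also appears in the Terraform app_settings (values under "Values" in local.settings.json are exposed to the app as environment variables when running locally). The function name and the "." driver directory are hypothetical, not taken from the repository.

```python
import os


def chromedriver_location(environment=None):
    """Return (directory, executable name) where ChromeDriver is expected.

    Hypothetical helper illustrating the rule above: "local" means Windows
    development, where chromedriver.exe comes from the Selenium package and
    is assumed to sit next to the build output ("."). Any other value means
    the Linux container, where the Dockerfile unzips chromedriver into /usr/bin.
    """
    if environment is None:
        # Assumed setting name; an unset value falls through to Linux.
        environment = os.environ.get("ENVIRONMENT", "")
    if environment == "local":
        return ".", "chromedriver.exe"
    return "/usr/bin", "chromedriver"
```

For example, chromedriver_location("dev") gives ("/usr/bin", "chromedriver"). Treating a missing setting the same as "any other value" means the container works even if the variable is never set.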

Note: You will also need an existing Azure Storage Account created and configured in the local.settings.json file if you plan to run the application locally before deploying the Terraform code.

Terraform

Terraform lets us define our desired resources and keep that configuration, along with subsequent configuration changes, under source control alongside the application code. Infrastructure as code also provides repeatability and prevents configuration drift across your environments.

Terraform tracks the resources it manages in a state file. In my case, I want to be able to run my Terraform code locally as well as through my DevOps pipelines without conflicts, so I'm using a shared remote state file. Details of remote state files can be found here; in my setup, a dedicated Azure Storage Account holds the state files for all my projects.
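With the azurerm backend, the shared state lives as a blob in that storage account. One way to keep local runs and the pipeline pointed at the same state is to leave the backend block empty in code and supply its settings at init time (Terraform's partial backend configuration). Below is a Python sketch that assembles that terraform init command; every resource name is a placeholder, and whether the repository uses partial configuration or hard-codes these values is an assumption.

```python
def terraform_init_args(resource_group, storage_account, container, state_key):
    """Build `terraform init` arguments for a shared azurerm remote state.

    Assumes main.tf declares an empty `backend "azurerm" {}` block so the
    values can be supplied here. All arguments are placeholders, not the
    project's real resource names.
    """
    settings = {
        "resource_group_name": resource_group,
        "storage_account_name": storage_account,
        "container_name": container,
        "key": state_key,  # blob name of this project's state file
    }
    args = ["terraform", "init"]
    for name, value in settings.items():
        args.append(f"-backend-config={name}={value}")
    return args
```

Running the result with subprocess.run(args, cwd="Terraform", check=True) from both a workstation and the pipeline, using the same four values, means both read and write the same state blob.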

To give the Terraform CLI permission to interact with my Azure subscription without storing my Service Principal's secrets in the repository, I'm using Terraform's Service Principal authentication and passing the Service Principal details in through environment variables. Setup information is here.
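The azurerm provider picks up Service Principal credentials from the ARM_CLIENT_ID, ARM_CLIENT_SECRET, ARM_SUBSCRIPTION_ID, and ARM_TENANT_ID environment variables. A small pre-flight check, sketched in Python (the helper name is hypothetical), can fail fast with a clear message before Terraform starts:

```python
import os

# Environment variables the azurerm provider reads for Service Principal
# authentication with a client secret.
REQUIRED_ARM_VARS = (
    "ARM_CLIENT_ID",
    "ARM_CLIENT_SECRET",
    "ARM_SUBSCRIPTION_ID",
    "ARM_TENANT_ID",
)


def missing_arm_vars(env=None):
    """Return the names of required ARM_* variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_ARM_VARS if not env.get(name)]
```

Accepting an optional mapping keeps the check testable without touching the real environment; with no argument it inspects os.environ. Only variable names are reported, never their values, so the result is safe to log.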

In the Terraform\main.tf file, we set up the Resource Group and Storage Account required for a Function App. I'm also including an Application Insights resource to provide ongoing monitoring of the application.

As of this writing, Azure supports custom containers only on Linux App Service Plans running on the Premium tier. This is defined by the following attributes of the azurerm_app_service_plan block:

```hcl
# ...
kind     = "Linux"
reserved = true

sku {
  tier = "PremiumV2"
  size = "P1v2"
}
# ...
```

Note: When kind = "Linux", you must set the attribute reserved = true.

And the pertinent attributes of the azurerm_function_app block are as follows:

```hcl
# ...
os_type = "linux"
version = "~3"
# ...
```

Part of my architecture is the use of Azure Container Registry to store the image for my application. The azurerm_container_registry block is pretty straightforward, but the interesting pieces lie within the app_settings and site_config blocks of the function app:

```hcl
# ...
app_settings = {
  APPINSIGHTS_INSTRUMENTATIONKEY      = azurerm_application_insights.main.instrumentation_key
  DOCKER_REGISTRY_SERVER_URL          = azurerm_container_registry.main.login_server
  DOCKER_REGISTRY_SERVER_USERNAME     = azurerm_container_registry.main.admin_username
  DOCKER_REGISTRY_SERVER_PASSWORD     = azurerm_container_registry.main.admin_password
  ENVIRONMENT                         = var.environment
  WEBSITE_ENABLE_SYNC_UPDATE_SITE     = true
  WEBSITE_RUN_FROM_PACKAGE            = 1
  WEBSITES_ENABLE_APP_SERVICE_STORAGE = false
}

site_config {
  always_on                 = true
  use_32_bit_worker_process = false
  linux_fx_version          = "DOCKER|$
# ...
```

The DOCKER_REGISTRY_* settings don't need much explanation, but I found that the WEBSITES_ENABLE_APP_SERVICE_STORAGE = false setting was required, as were all three attributes in the site_config block.
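The linux_fx_version value above is cut off, so its exact expression isn't recoverable here. For a custom container, App Service expects a value shaped like DOCKER|<registry>/<repository>:<tag>. Below is a small Python sketch that assembles a value of that shape; the registry, repository, and tag are placeholders, and the scheme-stripping is a defensive assumption rather than something the original code shows.

```python
def linux_fx_version(login_server, repository, tag="latest"):
    """Compose an App Service linux_fx_version value for a custom container.

    login_server corresponds to azurerm_container_registry.main.login_server
    (e.g. "myregistry.azurecr.io"); repository and tag are placeholders.
    """
    host = login_server
    # An image reference has no URL scheme, so drop one if present.
    if host.startswith("https://"):
        host = host[len("https://"):]
    host = host.rstrip("/")
    if not repository or ":" in repository:
        raise ValueError("repository must be non-empty and must not include a tag")
    return f"DOCKER|{host}/{repository}:{tag}"
```

Keeping the tag as a separate argument makes it easy to pin a specific build rather than relying on latest.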

Docker

The base Dockerfile comes from the initial project setup, generated by running func init --docker. It uses azure-functions/dotnet:3.0 as the base image for running our functions and dotnet/core/sdk:3.1 for building the application. Lastly, I install Chrome and the Chrome driver into the final image to add the libraries required to run the Selenium drivers.

Unfortunately, running the Chrome driver install as part of the final image causes some image bloat. However, when I tried to copy the /usr/bin/chromedriver file directly between image layers, I couldn't get the Chrome driver to run successfully because of missing dependency libraries.

```dockerfile
# Install Chrome, then download the ChromeDriver build that matches the installed Chrome version
RUN apt-get update && \
    apt-get install -y gnupg wget curl unzip --no-install-recommends && \
    wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | apt-key add - && \
    echo "deb http://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google.list && \
    apt-get update -y && \
    apt-get install -y google-chrome-stable --no-install-recommends && \
    rm -rf /var/lib/apt/lists/* && \
    CHROMEVER=$(google-chrome --product-version | grep -o "[^.]*\.[^.]*\.[^.]*" | sed 1q) && \
    DRIVERVER=$(curl -s "https://chromedriver.storage.googleapis.com/LATEST_RELEASE_$CHROMEVER") && \
    wget -q --continue -P /chromedriver "http://chromedriver.storage.googleapis.com/$DRIVERVER/chromedriver_linux64.zip" && \
    unzip /chromedriver/chromedriver* -d /usr/bin/
```

The other callout I'd like to make is the ARG StorageConnectionString argument in the Dockerfile. It allows me to pass the required Azure Storage Account connection string in my docker build command instead of hard-coding the value in plain text in my repository.
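The CHROMEVER and DRIVERVER steps in the Dockerfile's RUN instruction are the subtle part: a ChromeDriver release is matched to Chrome's MAJOR.MINOR.BUILD prefix through a LATEST_RELEASE_<prefix> file. Below is a Python sketch of just that string logic, with no network calls; the version numbers in the checks are made-up examples, and the sketch uses HTTPS for both URLs where the Dockerfile downloads the zip over HTTP.

```python
import re

DRIVER_BASE = "https://chromedriver.storage.googleapis.com"


def chrome_build_prefix(product_version):
    """Return MAJOR.MINOR.BUILD from `google-chrome --product-version` output.

    Mirrors the grep/sed step: keep the first three dot-separated
    components, e.g. "85.0.4183.102" -> "85.0.4183".
    """
    match = re.match(r"\s*(\d+\.\d+\.\d+)", product_version)
    if match is None:
        raise ValueError(f"unexpected Chrome version: {product_version!r}")
    return match.group(1)


def latest_release_url(product_version):
    """URL of the file naming the newest driver for this Chrome build."""
    return f"{DRIVER_BASE}/LATEST_RELEASE_{chrome_build_prefix(product_version)}"


def driver_zip_url(driver_version):
    """URL of the Linux driver archive; strips the trailing newline curl may return."""
    return f"{DRIVER_BASE}/{driver_version.strip()}/chromedriver_linux64.zip"
```

Matching on the build prefix rather than the full version is what keeps the driver compatible with whatever Chrome build apt installed at image build time.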

Running the docker build and docker run commands lets me see the application running in a container on my local machine.
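The docker build step is where the StorageConnectionString build argument gets supplied. Below is a minimal Python sketch that assembles the build and run commands as argument lists for subprocess.run. The image tag is a placeholder, and the run command assumes the Functions base image serves HTTP on port 80; for a timer-triggered function the port mapping is optional.

```python
def docker_build_command(image_tag, connection_string, context="."):
    """argv for building the image, supplying the Dockerfile's ARG StorageConnectionString."""
    if not connection_string:
        raise ValueError("a storage connection string is required")
    return [
        "docker", "build",
        "--build-arg", f"StorageConnectionString={connection_string}",
        "-t", image_tag,
        context,
    ]


def docker_run_command(image_tag, host_port=8080):
    """argv for running the container locally and removing it on exit."""
    # Assumes the Functions runtime inside the container listens on port 80.
    return ["docker", "run", "--rm", "-p", f"{host_port}:80", image_tag]
```

For example, subprocess.run(docker_build_command("seo-bot:local", os.environ["STORAGE_CONNECTION_STRING"]), check=True), where STORAGE_CONNECTION_STRING is a hypothetical variable holding the secret, keeps the value out of the repository. Passing argument lists instead of a shell string also avoids quoting problems, since connection strings contain ; and = characters.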

Great! Time to push everything to Azure.

Azure DevOps

After creating a new project in my Azure DevOps instance, I can set up a new pipeline based on the existing azure-pipelines.yml file. The pipeline requires some configuration, such as service connections and various variables, each described in more detail in the README.md.

There are two main stages in the pipeline:

- Terraform Jobs

- Build and Push Image Jobs

The Terraform jobs perform the basic init, plan, and apply tasks based on the .tf files in the Terraform\ folder. This is where the shared remote state file comes in handy: if I had previously applied my Terraform modules locally before running the pipeline, the pipeline won't try to recreate the existing resources, and vice versa. That matters because I'd recommend running the Terraform code before setting up the pipeline, since the pipeline needs a service connection to your Azure Container Registry, which is created by the Terraform code.

The container jobs use the built-in Docker@2 task to log in to the container registry, then build and push the image to the defined repository in my container registry.
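The Docker@2 task wraps ordinary Docker CLI operations. Below is a rough Python sketch of the equivalent login, build, and push steps, handy for reproducing a pipeline push by hand. It approximates what the task does rather than reproducing its implementation; the registry, repository, and tags are placeholders (a pipeline would typically tag with something like its build ID).

```python
def acr_push_commands(login_server, repository, tags):
    """Return docker CLI steps roughly equivalent to login + build + push.

    login_server is the registry's login server (e.g. "myregistry.azurecr.io").
    Credentials are left to `docker login`'s own prompt; in the pipeline,
    the service connection supplies them instead.
    """
    if not tags:
        raise ValueError("at least one tag is required")
    refs = [f"{login_server}/{repository}:{tag}" for tag in tags]
    build = ["docker", "build"]
    for ref in refs:
        build += ["-t", ref]
    build.append(".")
    # One push per fully qualified reference.
    return [["docker", "login", login_server], build] + [["docker", "push", ref] for ref in refs]
```

Building once with several -t flags and pushing each reference avoids rebuilding the image for every tag.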

Configure and Monitor

If everything in the pipeline is configured correctly, we should end up with a successful deployment of our Azure resources from Terraform, a new image sitting in the ACR repository, and a running Function App waiting for final configuration.

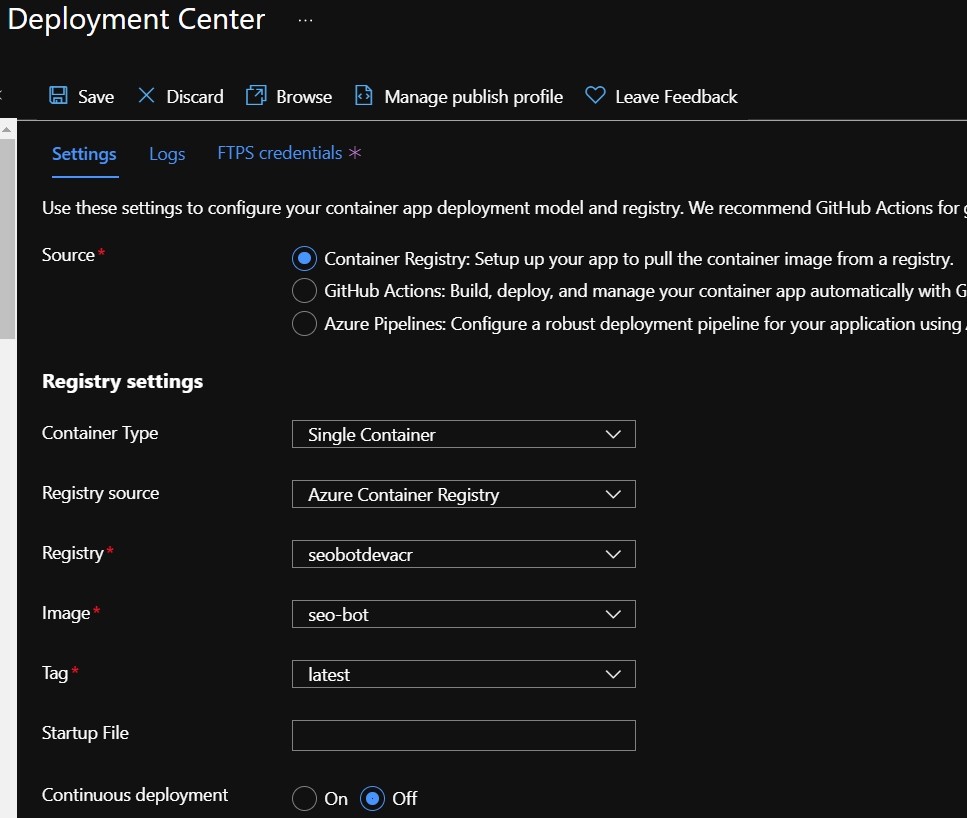

The final configuration step is to tell your Function App where to pull the image from your repository. Navigate to the Function App created by Terraform. From Deployment > Deployment Center, configure the Container Registry settings for a Single Container.

We can perform local development and testing, push code updates to the repository, monitor build activity from Azure DevOps, and monitor the health and activity of our Function App with Application Insights.

With the current version of the app, there is clearly some work to do to make the container image more lightweight, since it carries a decent amount of bloat. Reviewing performance and failures in Application Insights also shows plenty of work left to iron out the exceptions and memory usage.

But as an exercise in building an end-to-end containerized application running in a serverless environment, I'm pretty impressed.